I am a Researcher at Shanghai AI Laboratory. I received my PhD in Computer Science from the State University of New York (SUNY) at Binghamton in May 2024. During my PhD, I was supported by grants from the Ford Motor Company for 4.5 years, and I received the Academic Excellence in Computer Science (PhD) award at Binghamton University. I received my M.S. in Computer Science in 2019 and my B.S. in Mechanical Engineering in 2016 from Chongqing University, China. My master's thesis received the Outstanding Thesis award of Chongqing City. I am looking for interns in Embodied AI and Robotics! Feel free to contact me at yding25@binghamton.edu.

My current research interests include:

with a particular emphasis on their applications to mobile manipulators (MoMa).

BestMan X reflects my vision for these robots to be the best assistants for humans.

It is a comprehensive “BestMan” world encompassing open data, code, simulators, hardware, and my aspirations. For more information, please visit [Link]. Because my team works with multiple robots, we are developing BestMan, an open-source robotics tool that supports development both in simulation and on real robots. Its unified framework enables rapid development and saves researchers significant time. (Note: BestMan is still under construction.) The project includes the following sub-projects (selected):

Xiaohongshu channel: 444988405

WeChat Video Channel (scan with WeChat):

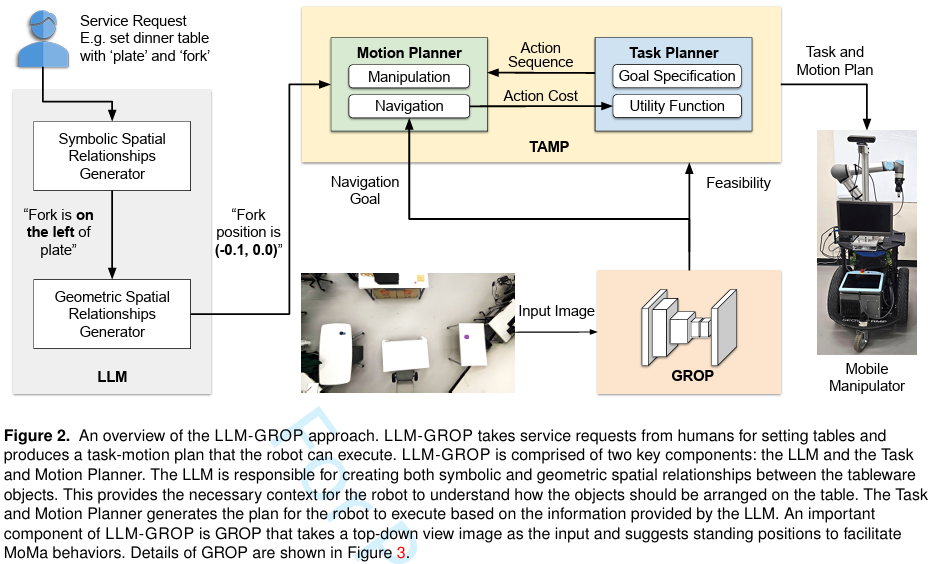

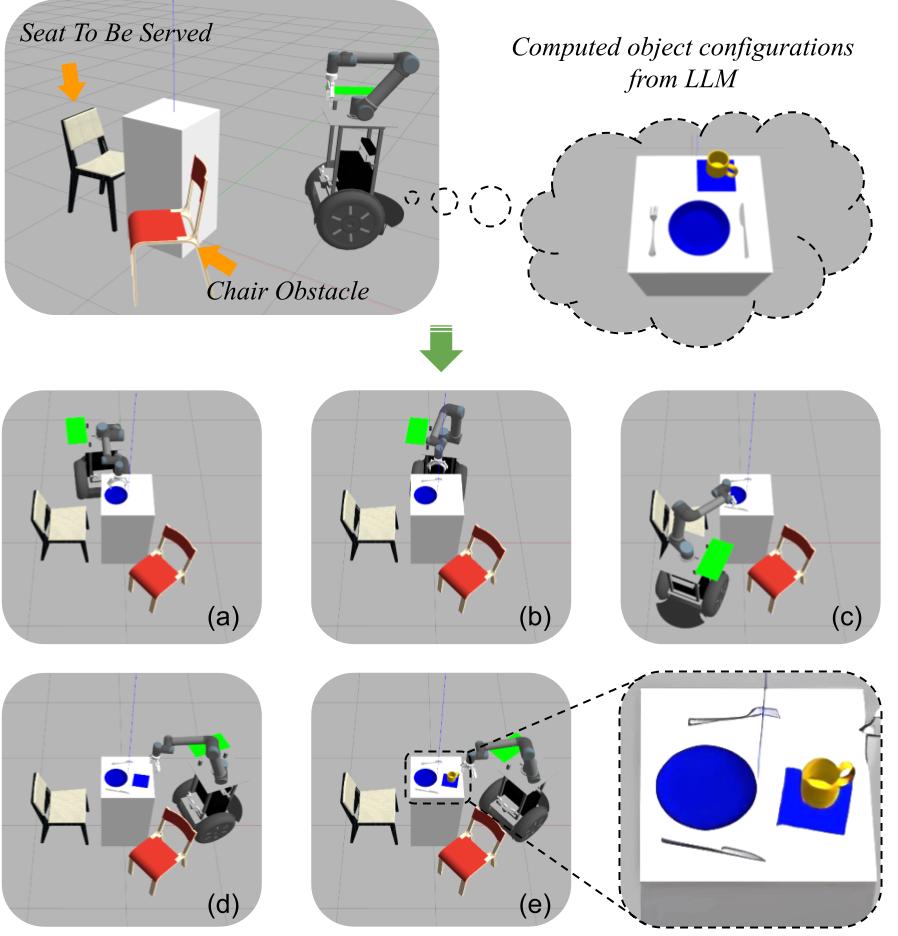

Xiaohan Zhang#, Yan Ding#, Yohei Hayamizu, Zainab Altaweel, Yifeng Zhu, Yuke Zhu, Peter Stone, Chris Paxton, Shiqi Zhang IJRR 2025 [Paper] [Project] LLM-GROP is a method that uses prompting to extract commonsense knowledge about object configurations from a large language model and instantiates them with a task and motion planner, enabling successful and efficient multi-object rearrangement in diverse environments with a mobile manipulator.

Pingrui Zhang*, Xianqiang Gao*, Yuhan Wu, Kehui Liu, Dong Wang, Zhigang Wang, Bin Zhao, Yan Ding#, Xuelong Li Under Review [Paper] [Project] We present MoMa-Kitchen, a benchmark dataset with over 100k auto-generated samples featuring affordance-grounded manipulation positions and egocentric RGB-D data, and propose NavAff, a lightweight model that learns optimal navigation termination for seamless manipulation transitions. Our approach generalizes across diverse robotic platforms and arm configurations, addressing the critical gap between navigation proximity and manipulation readiness in mobile manipulation.

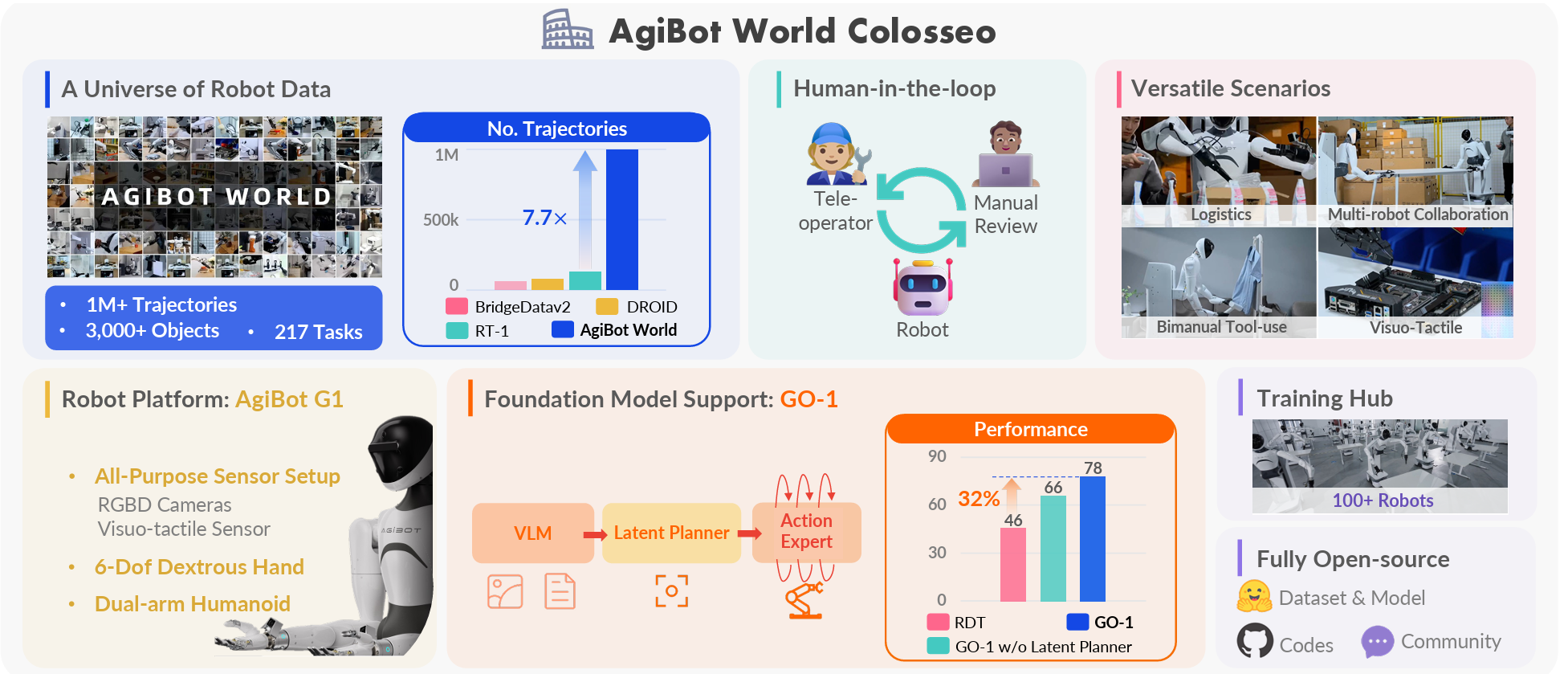

Team AgiBot-World Under Review [Paper] [Project] We introduce AgiBot World Colosseo, an open-source, large-scale manipulation platform comprising data, models, benchmarks, and an ecosystem. AgiBot World stands out for its unparalleled scale and diversity compared with prior counterparts, and it deploys a fleet of 100 dual-arm humanoid robots. We further propose GO-1, a generalist policy with a latent action planner. Trained on a diverse data corpus, it shows scalable performance and a 32% gain over prior methods.

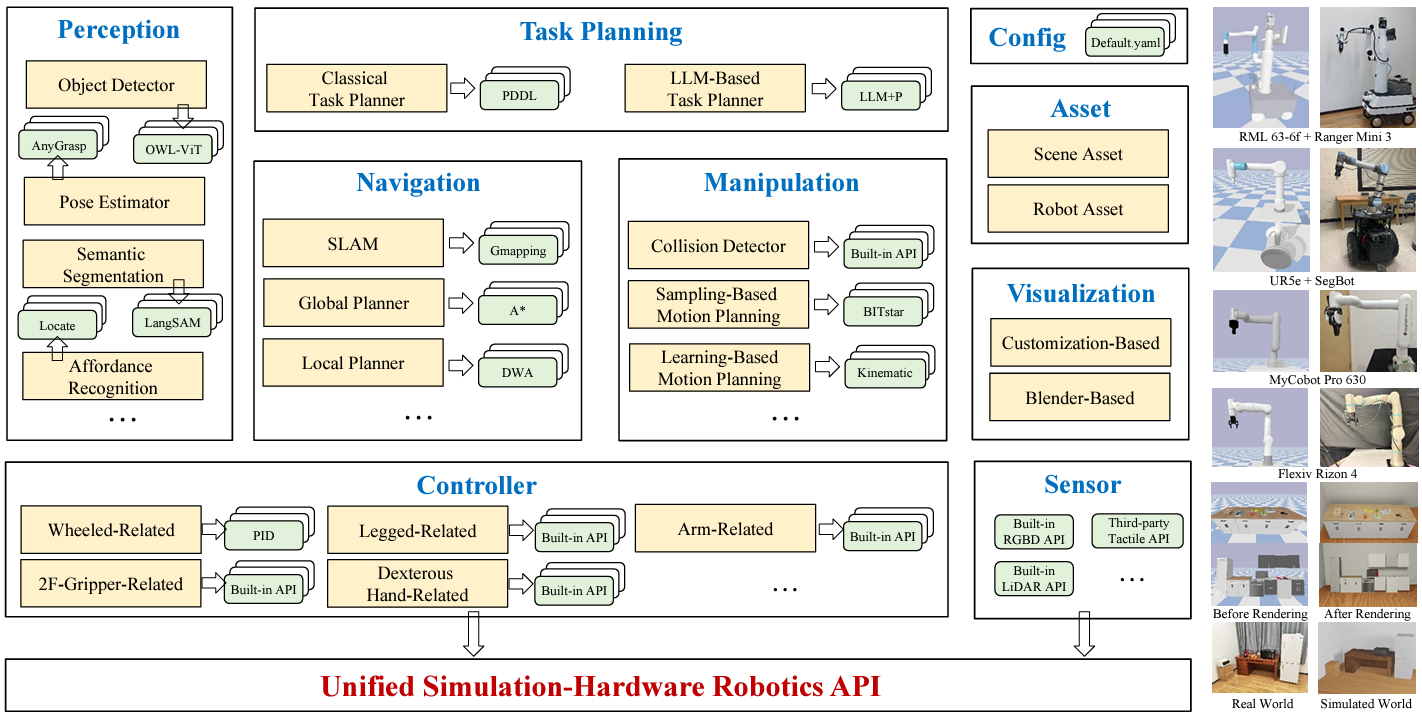

Kui Yang*, Nieqing Cao*, Yan Ding#, Chao Chen# FCS Letter [Paper] [Project] To address the multilevel technical complexity, interface heterogeneity, and hardware diversity of robot development, we develop the BestMan platform based on the PyBullet simulator, with four key contributions: 1) an integrated multilevel skill chain that addresses multilevel technical complexity; 2) a highly modular design for expandability and algorithm integration; 3) unified interfaces for simulation and real devices that address interface heterogeneity; and 4) decoupling of software from hardware to address hardware diversity.

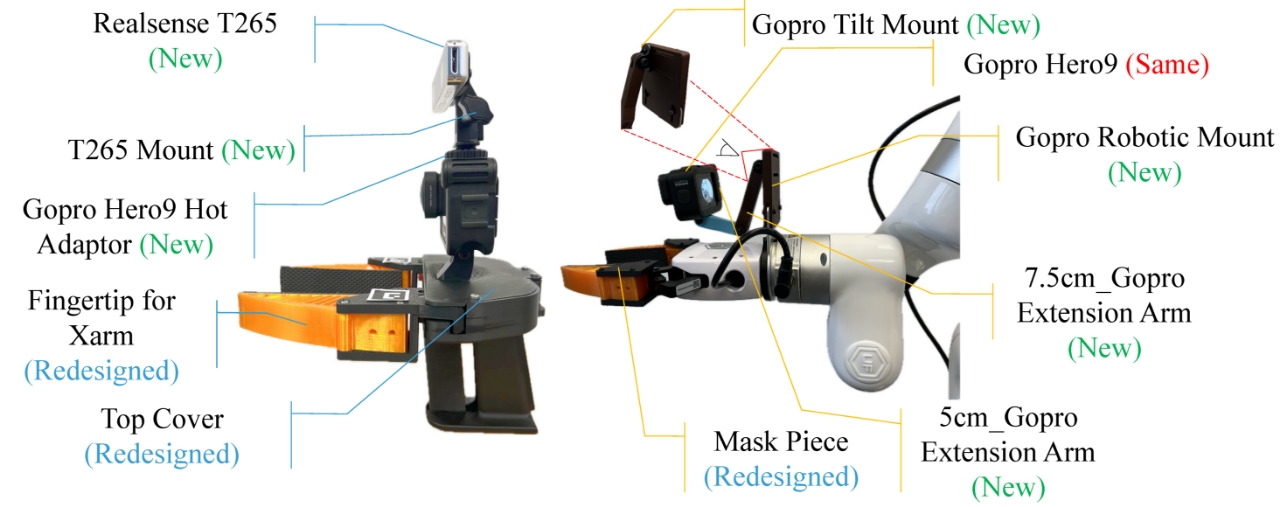

Zhaxizhuoma*, Kehui Liu*, Chuyue Guan*, Zhongjie Jia*, Ziniu Wu*, Xin Liu*, Tianyu Wang**, Shuai Liang**, Pengan Chen**, Pingrui Zhang**, Haoming Song, Delin Qu, Dong Wang, Zhigang Wang, Nieqing Cao, Yan Ding*#, Bin Zhao#, Xuelong Li Under Review [Paper] [Project] In this work, we introduce Fast-UMI, an interface-mediated manipulation system with two key components: a handheld device operated by humans for data collection and a robot-mounted device used during policy inference. The system provides an efficient, user-friendly tool for acquiring robot learning data.

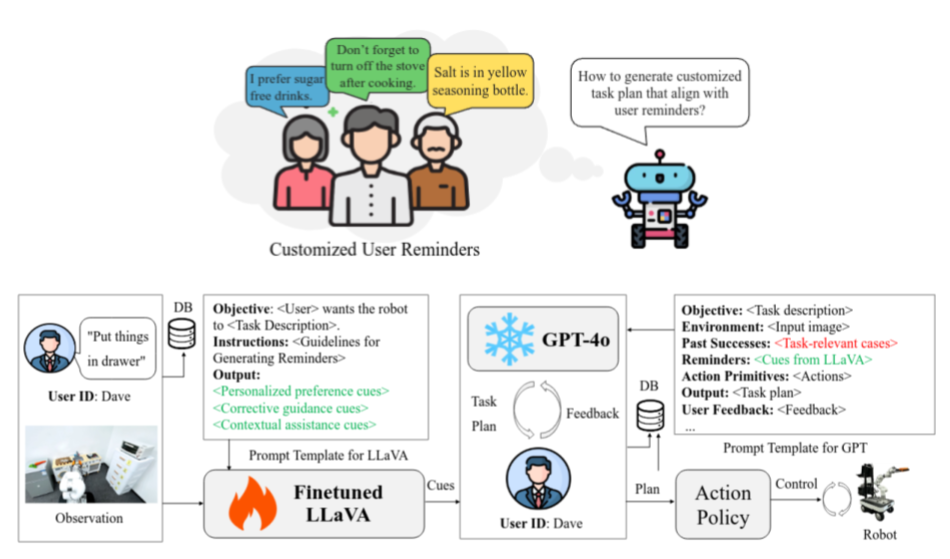

Zhaxizhuoma*, Pengan Chen*, Ziniu Wu*, Jiawei Sun, Dong Wang, Peng Zhou, Nieqing Cao, Yan Ding#, Bin Zhao, Xuelong Li ICRA 2025 [Paper] [Project] This paper presents AlignBot, a novel framework that optimizes VLM-powered customized task planning for household robots by aligning with user reminders. AlignBot employs a fine-tuned LLaVA-7B model that functions as an adapter for GPT-4o. This adapter internalizes diverse forms of user reminders (such as personalized preferences, corrective guidance, and contextual assistance) into structured, instruction-formatted cues that prompt GPT-4o to generate customized task plans.

Xiaohan Zhang, Zainab Altaweel, Yohei Hayamizu, Yan Ding, Saeid Amiri, Hao Yang, Andy Kaminski, Chad Esselink, Shiqi Zhang Under Review [Paper]

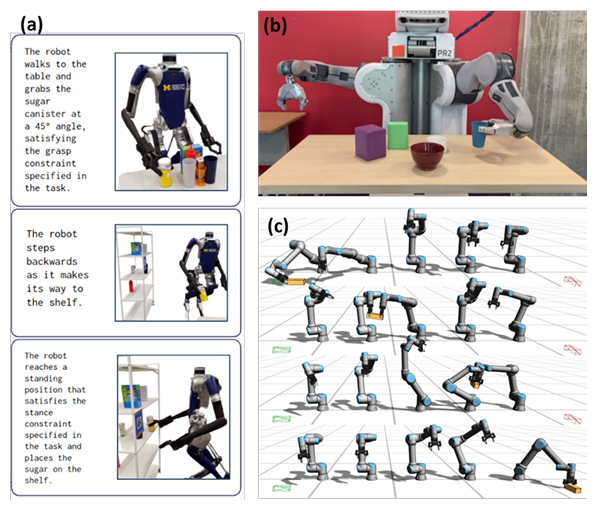

Zhigen Zhao, Shuo Chen, Yan Ding, Ziyi Zhou, Shiqi Zhang, Danfei Xu, Ye Zhao IEEE/ASME Transactions on Mechatronics, 2024 [Paper]

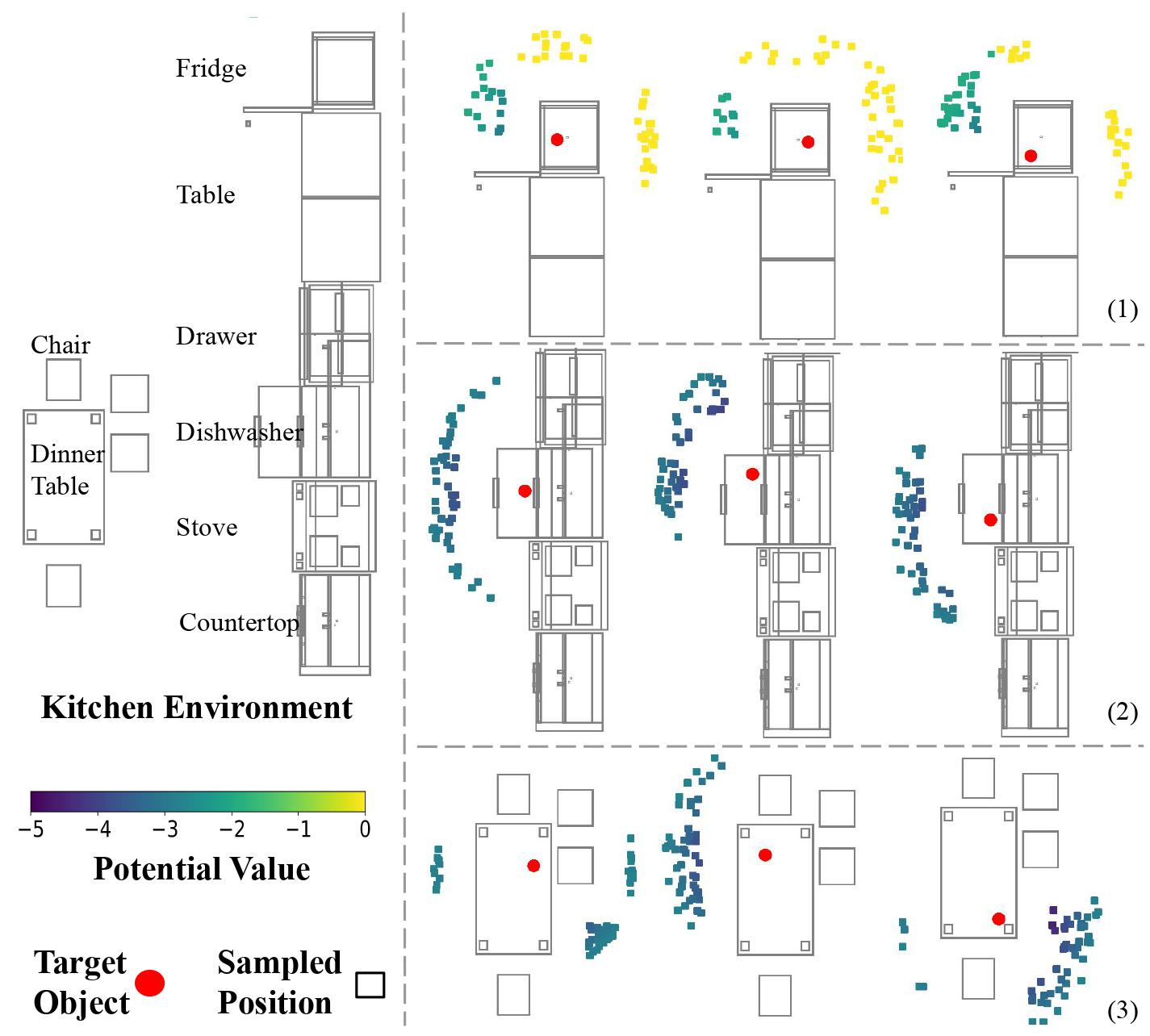

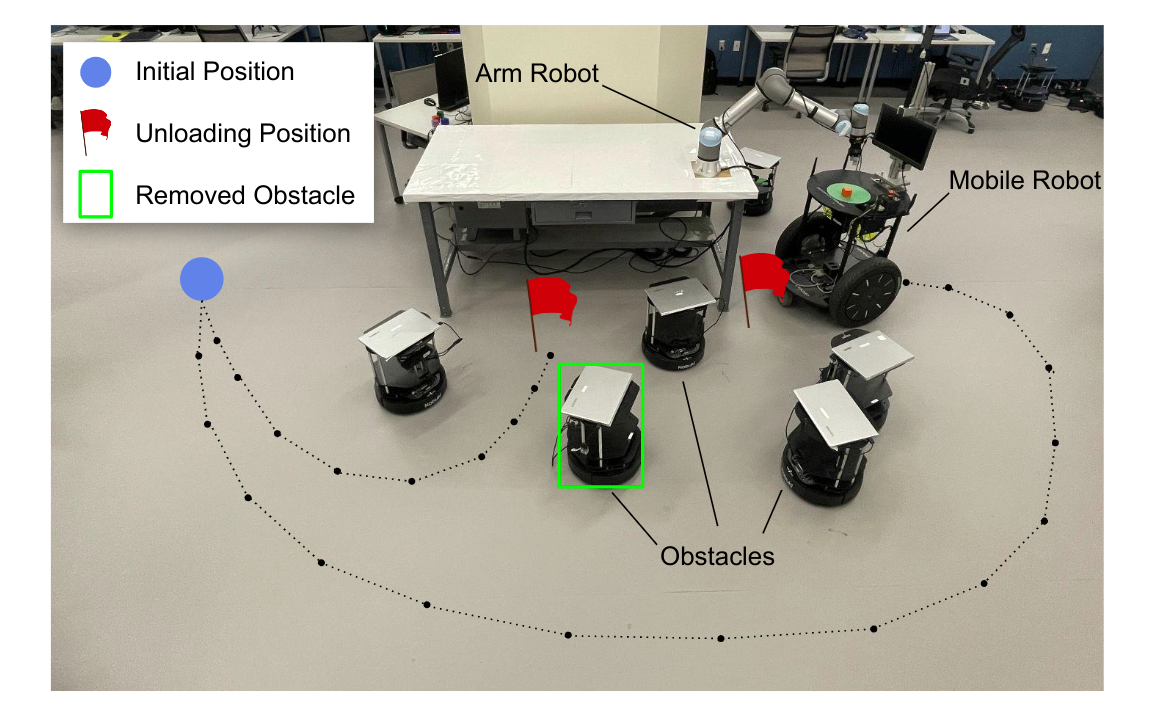

Beichen Shao*, Yan Ding*#, Xingchen Wang, Xuefeng Xie, Fuqiang Gu, Jun Luo, Chao Chen# Under Review [Paper] [Project] Mobile manipulators must determine feasible base positions before carrying out navigation-manipulation tasks. Because real-world environments are often cluttered with furniture, obstacles, and dozens of other objects, computing base positions efficiently is challenging. In this work, we introduce a framework named MoMa-Pos to address this issue.

Yan Ding, Xiaohan Zhang, Chris Paxton, Shiqi Zhang International Conference on Intelligent Robots and Systems (IROS), 2023 [Paper] [Project] LLM-GROP is a method that uses prompting to extract commonsense knowledge about object configurations from a large language model and instantiates them with a task and motion planner, enabling successful and efficient multi-object rearrangement in diverse environments with a mobile manipulator.

Chelsea Zou, Kishan Chandan, Yan Ding, Shiqi Zhang ICRA Workshop on CoPerception: Collaborative Perception and Learning, 2023 [Paper]

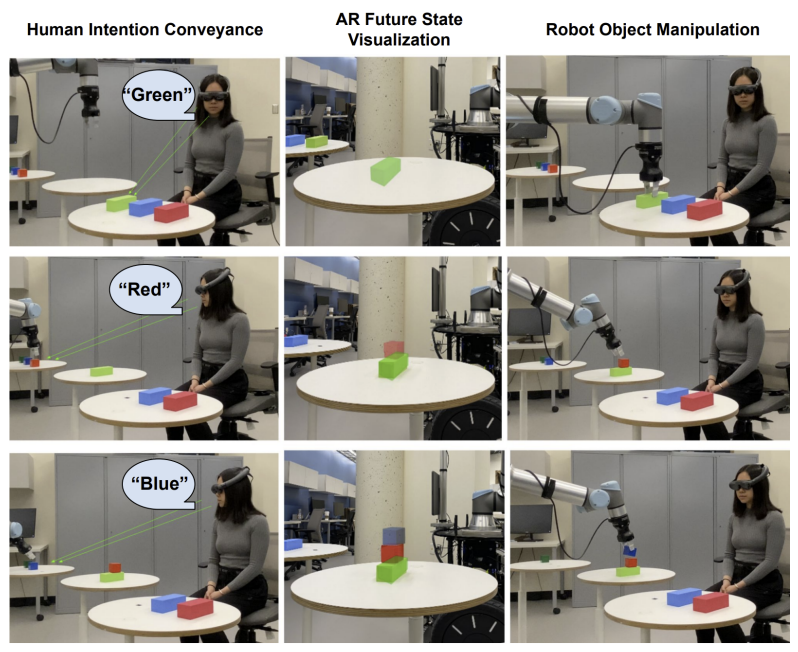

Xiaohan Zhang, Yifeng Zhu, Yan Ding, Yuqian Jiang, Yuke Zhu, Peter Stone, and Shiqi Zhang International Conference on Intelligent Robots and Systems (IROS), 2023 [Paper]

Cheng Cui, Saeid Amiri, Yan Ding, Xingyue Zhan, Shiqi Zhang The Conference on Uncertainty in Artificial Intelligence (UAI), 2023 [Paper]

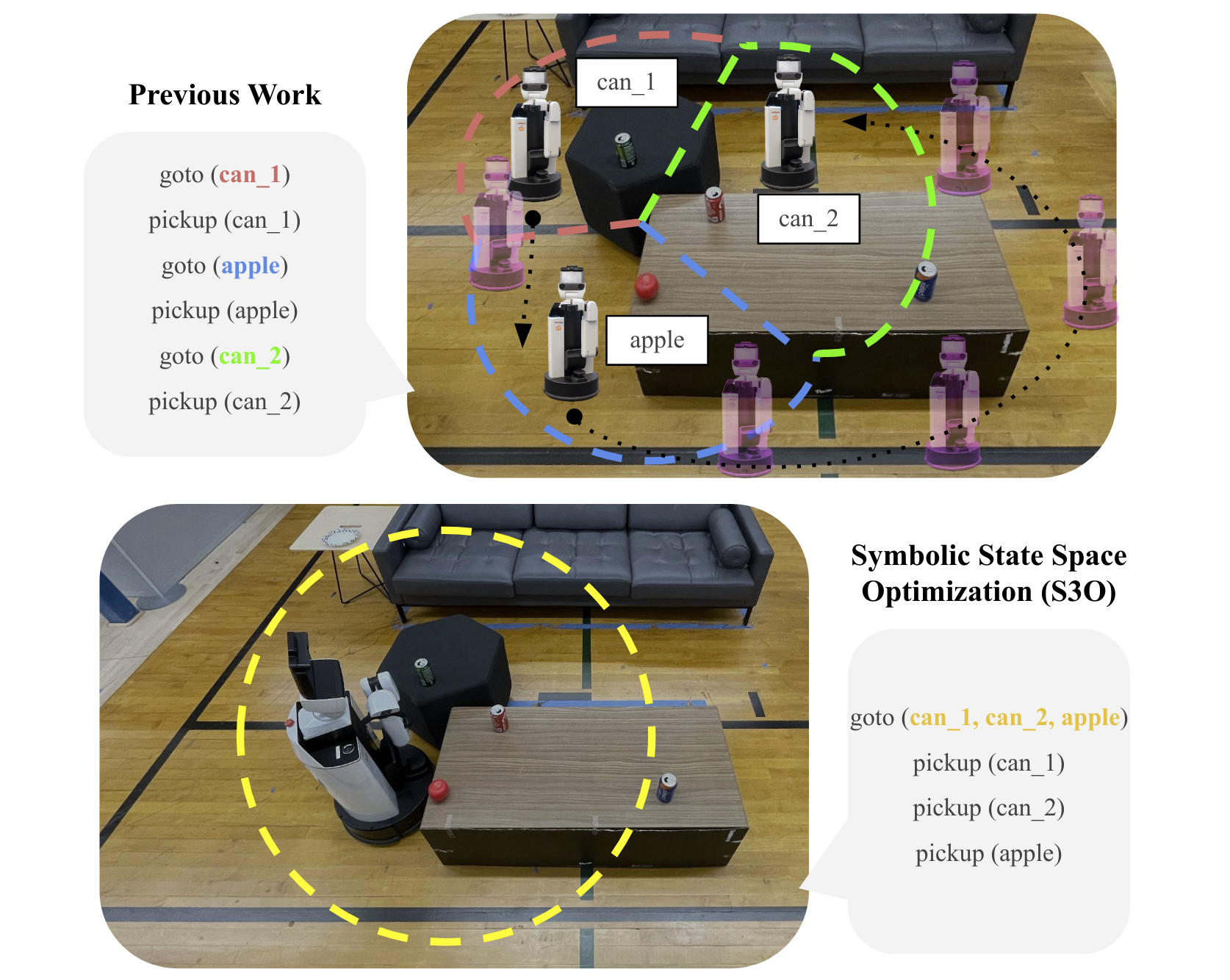

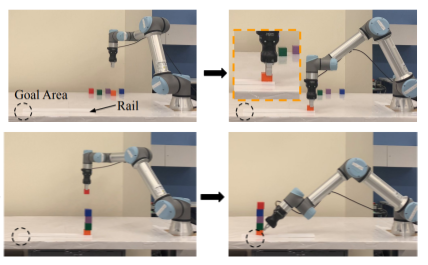

Kai Gao*, Zhaxizhuoma*, Yan Ding, Shiqi Zhang, Jingjin Yu ICRA 2025 [Paper] We propose ORLA*, which uses delayed (lazy) evaluation to search for a high-quality object pick-and-place sequence that accounts for both end-effector and mobile-base travel.

Xiaohan Zhang, Yan Ding, Saeid Amiri, Hao Yang, Andy Kaminski, Chad Esselink, and Shiqi Zhang ICRA Workshop on Robot Execution Failures and Failure Management Strategies, 2023 [Paper]

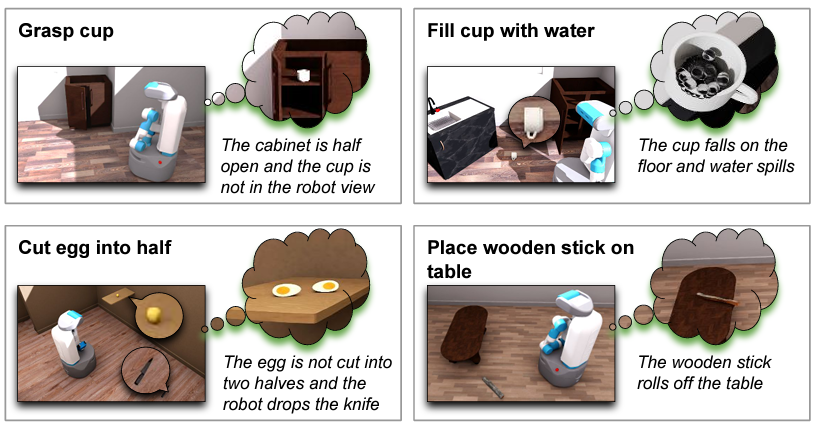

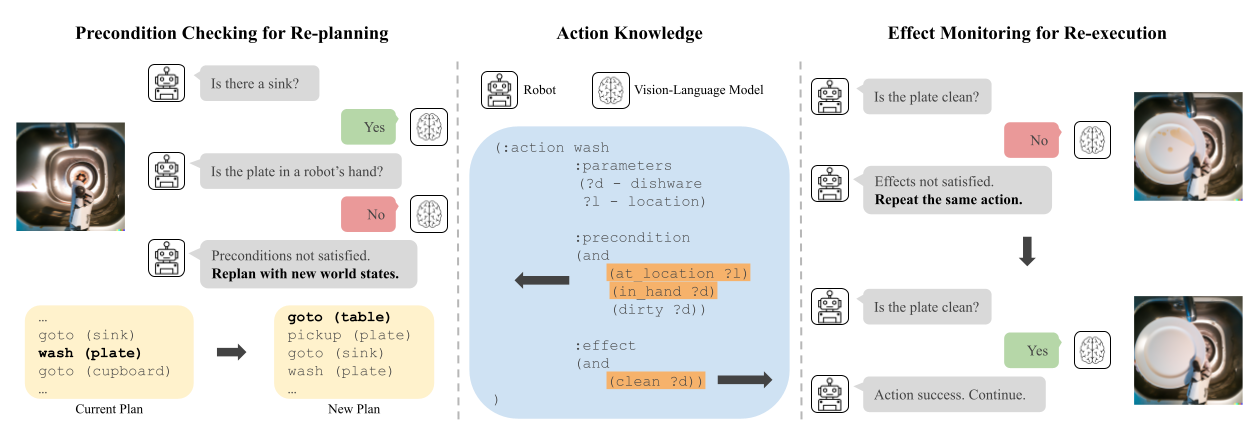

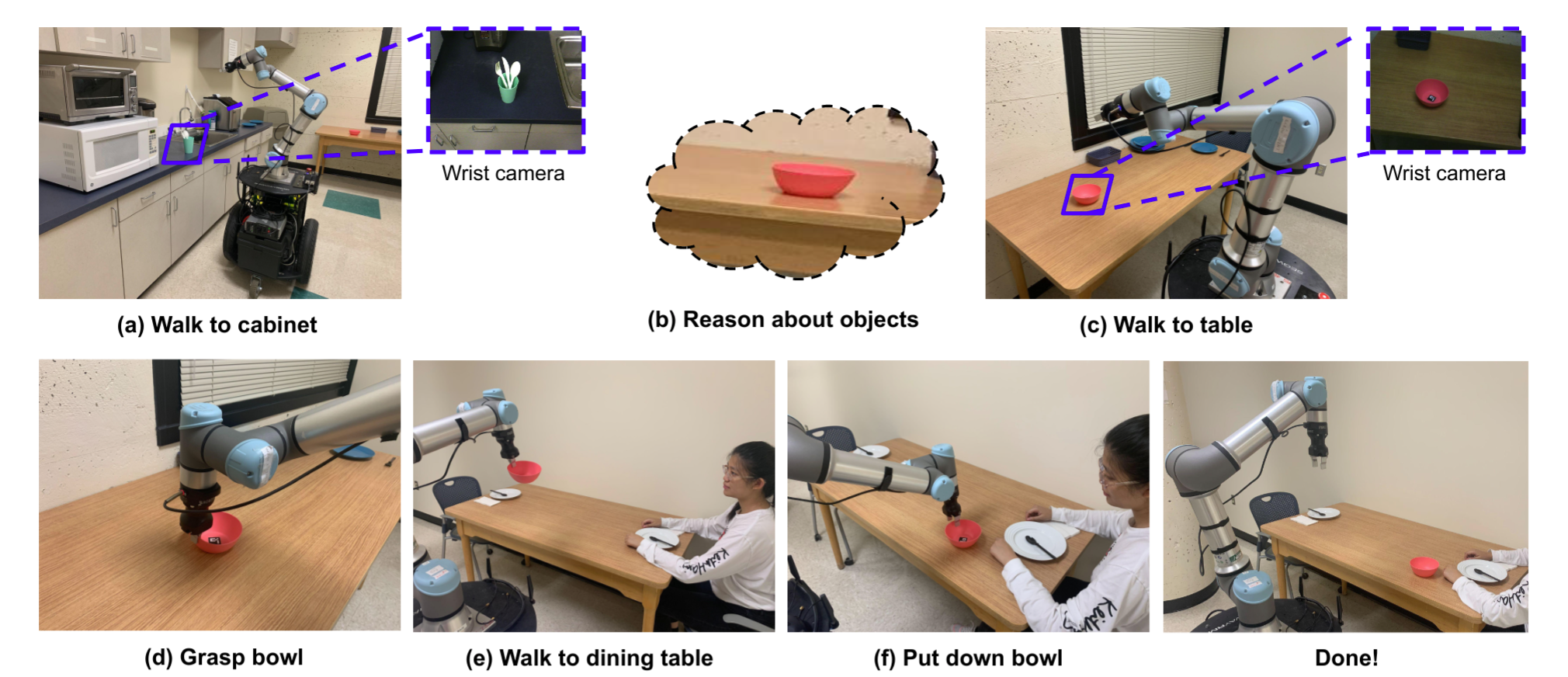

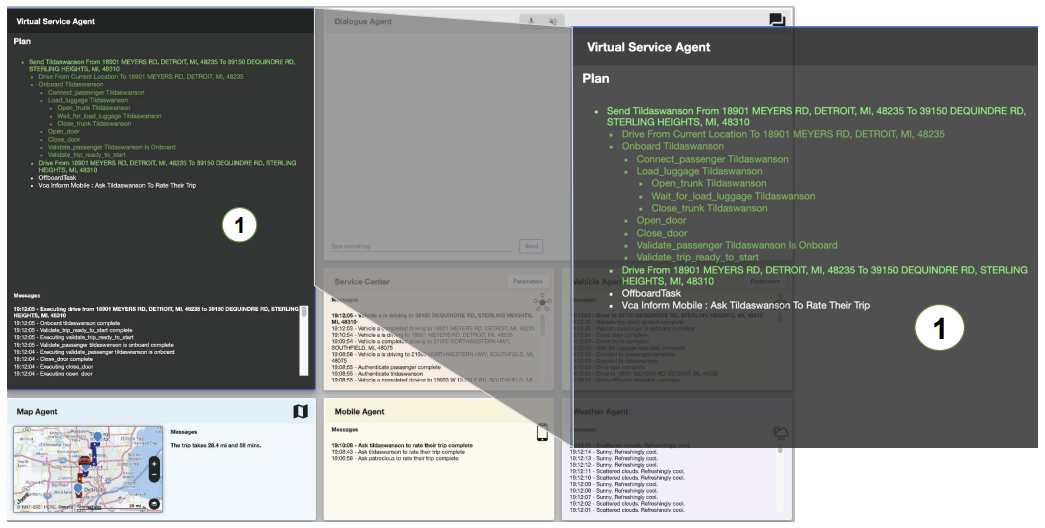

Yan Ding, Xiaohan Zhang, Saeid Amiri, Nieqing Cao, Hao Yang, Chad Esselink, Shiqi Zhang Autonomous Robots (accepted) [Paper] [Project] [Video] [Code] The paper introduces COWP, a new algorithm that uses task-oriented common sense extracted from large language models to help robots handle unforeseen situations and complete complex tasks in an open world, achieving higher success rates than previous algorithms.

Yan Ding, Xiaohan Zhang, Xingyue Zhan, Shiqi Zhang IEEE Robotics and Automation Letters (RA-L), 2022 [Paper] [Project] [Code] [Presentation] The paper presents TMOC, a new robot planning algorithm that handles complex real-world scenarios without prior knowledge of object properties by learning those properties with a physics engine, and outperforms existing algorithms.

Xiaohan Zhang, Yifeng Zhu, Yan Ding, Yuke Zhu, Peter Stone, and Shiqi Zhang International Conference on Robotics and Automation (ICRA), 2022 [Paper] [Project]

Hao Yang, Tavan Eftekhar, Chad Esselink, Yan Ding, Shiqi Zhang International Conference on Case-Based Reasoning (ICCBR), 2021 [Paper]

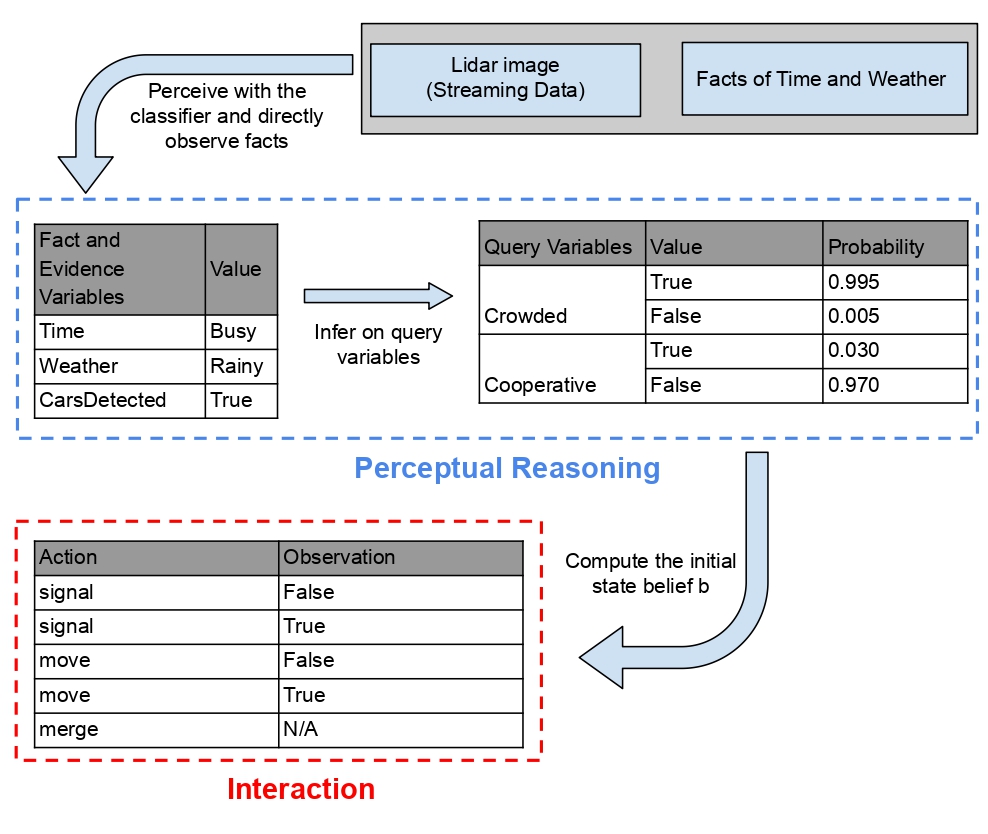

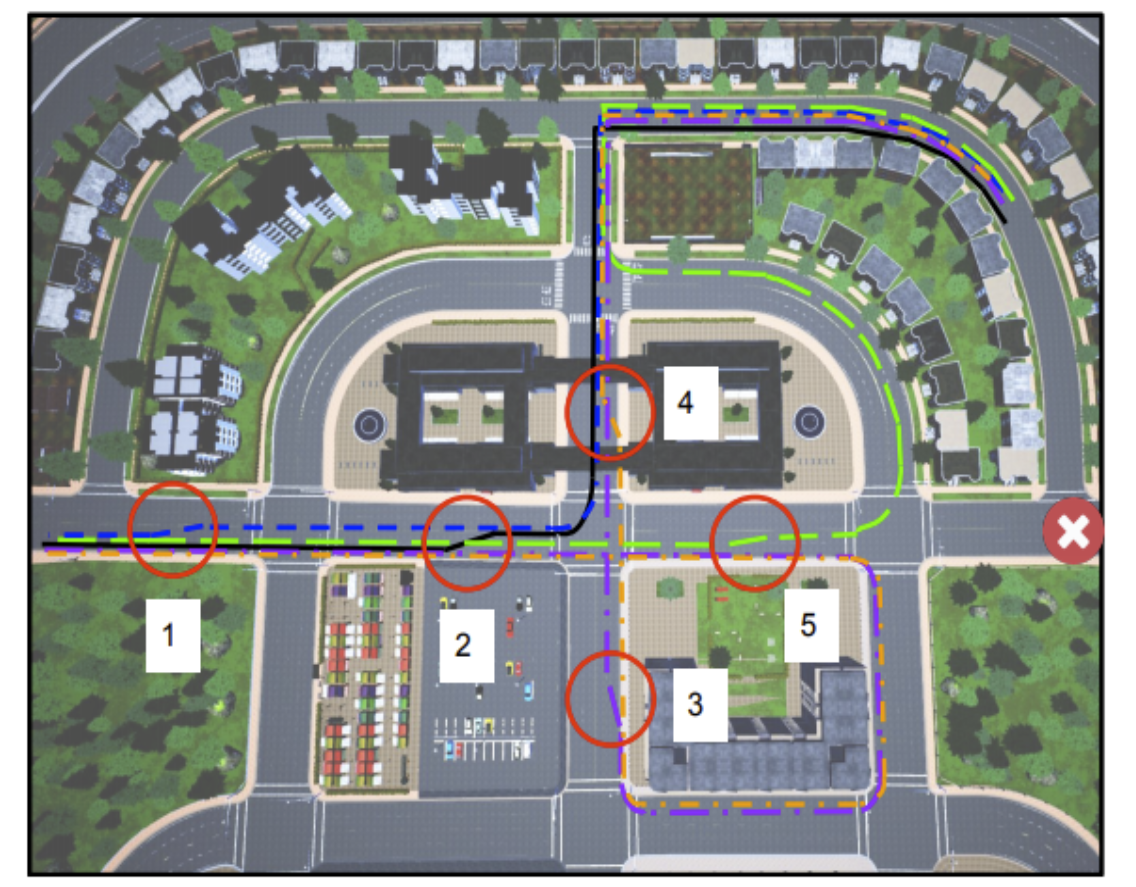

Yan Ding, Xiaohan Zhang, Xingyue Zhan, Shiqi Zhang International Conference on Intelligent Robots and Systems (IROS), 2020. [Paper] [Project] [Code] [Demo] [Presentation] Autonomous vehicles must balance efficiency and safety when planning tasks and motions. The proposed algorithm, Task-Motion Planning for Urban Driving (TMPUD), enables communication between the task and motion planners for optimal performance.

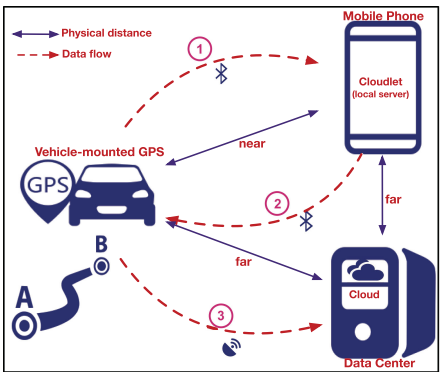

Chao Chen*, Yan Ding*, Suiming Guo, Yasha Wang IEEE TVT, 2020. [PDF] DAVT is a mobile edge computing solution for vehicle trajectory data compression that reduces data at the source, lowering communication and storage costs. It uses three compressors for the distance, acceleration, velocity, and time parts of the data, and evaluation results show that it outperforms other baselines.

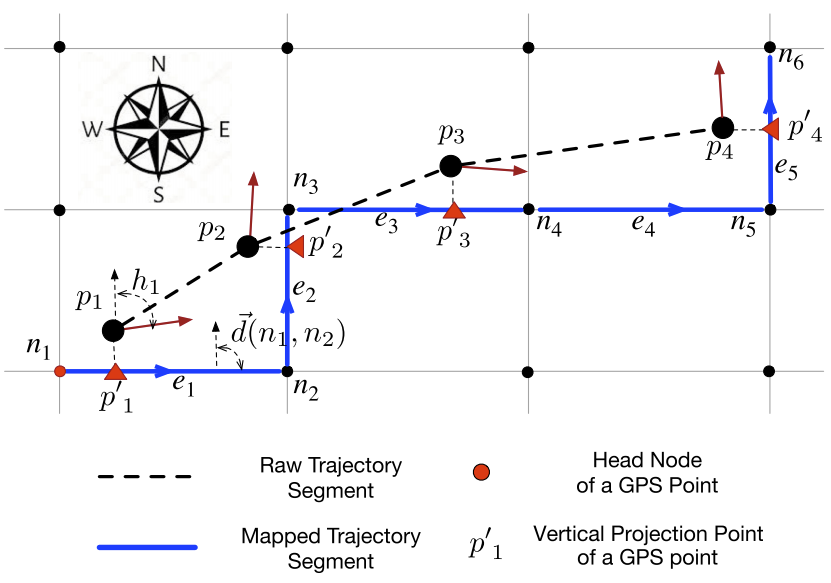

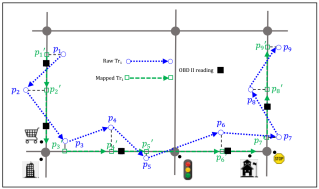

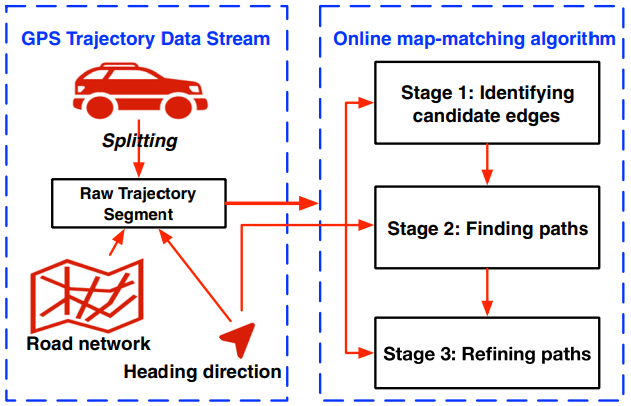

Chao Chen*, Yan Ding*, Zhu Wang, Junfeng Zhao, Bin Guo, Daqing Zhang IEEE Systems Journal, 2019. [PDF] This paper proposes an online trajectory compression framework that uses SD-Matching to map-match GPS points and HCC to compress the trajectories. Its effectiveness and efficiency are demonstrated on real-world datasets from Beijing and through a deployment in Chongqing.

Chao Chen*, Yan Ding*, Xuefeng Xie, Shu Zhang, Zhu Wang, Liang Feng IEEE TITS, 2019. [PDF] This paper presents an online trajectory compression framework that reduces the storage, communication, and computation costs caused by massive, redundant vehicle trajectory data. The framework has two phases, online trajectory mapping and trajectory compression, implemented by the Spatial-Directional Matching and Heading Change Compression algorithms, respectively. Evaluated on real-world datasets from Beijing and deployed in Chongqing, it achieves higher accuracy and efficiency than state-of-the-art algorithms.

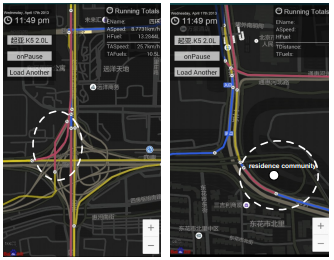

Chao Chen*, Yan Ding*, Xuefeng Xie, Zhikai Yang Green, Pervasive, and Cloud Computing: 13th International Conference (GPC), 2018. [PDF] This paper proposes a fuel consumption model based on GPS trajectory and OBD-II data that estimates the fuel usage of driving paths and helps drivers choose fuel-efficient routes, reducing greenhouse gas and pollutant emissions.

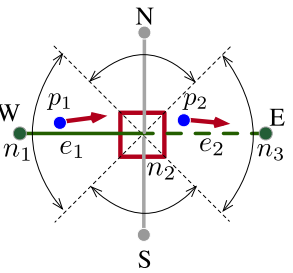

Chao Chen*, Yan Ding*, Xuefeng Xie, Shu Zhang Journal of Ambient Intelligence and Humanized Computing, 2018. [PDF] This paper proposes SD-Matching, a three-stage online map-matching algorithm that improves accuracy and speed by incorporating vehicle heading direction data.

Yan Ding*, Chao Chen*, Shu Zhang, Bin Guo, Zhiwen Yu, Yasha Wang IEEE PerCom, 2017. [PDF] Vehicle greenhouse gas emissions are a significant problem in modern cities. Recommending fuel-efficient routes to drivers through a personalized fuel consumption model can help alleviate this issue, as demonstrated by the implementation of GreenPlanner in Beijing, which achieved a mean fuel consumption error of less than 7% and average fuel savings of 20% on suggested routes.